Evaluating agent performance is harder than evaluating models. After developing evaluation frameworks for 10+ agent systems, I’ve learned what metrics matter and how to test effectively. Here’s the complete guide to evaluating agent performance.

Why Agent Evaluation is Different

Agent evaluation is more complex than model evaluation:

- Multi-step reasoning: Agents make multiple decisions, not just one prediction

- Stateful behavior: Actions depend on previous states and decisions

- Tool usage: Agents interact with external tools and APIs

- Error propagation: Early errors affect later steps

- Non-deterministic: Same input can produce different outputs

After implementing comprehensive evaluation for our research agent, we caught 40% more issues before production and improved success rate by 35%.

Key Evaluation Metrics

1. Task Completion Rate

Percentage of tasks completed successfully:

from typing import List, Dict, Any

from datetime import datetime

class TaskCompletionEvaluator:

def __init__(self):

self.completed_tasks = 0

self.failed_tasks = 0

self.task_history = []

def evaluate_task_completion(self, agent, test_tasks: List[Dict]) -> Dict:

# Evaluate task completion across test suite

results = {

"total_tasks": len(test_tasks),

"completed": 0,

"failed": 0,

"partial": 0,

"task_details": []

}

for task in test_tasks:

try:

result = agent.execute(task["input"])

success = self._is_successful(result, task.get("expected_output"))

task_result = {

"task_id": task.get("id"),

"input": task["input"],

"output": result,

"expected": task.get("expected_output"),

"success": success,

"completion_score": self._calculate_completion_score(result, task)

}

if success:

results["completed"] += 1

elif task_result["completion_score"] > 0.5:

results["partial"] += 1

else:

results["failed"] += 1

results["task_details"].append(task_result)

except Exception as e:

results["failed"] += 1

results["task_details"].append({

"task_id": task.get("id"),

"error": str(e),

"success": False

})

# Calculate metrics

results["completion_rate"] = results["completed"] / results["total_tasks"] if results["total_tasks"] > 0 else 0

results["partial_completion_rate"] = results["partial"] / results["total_tasks"] if results["total_tasks"] > 0 else 0

results["failure_rate"] = results["failed"] / results["total_tasks"] if results["total_tasks"] > 0 else 0

return results

def _is_successful(self, result: Any, expected: Any) -> bool:

# Check if result matches expected output

if expected is None:

# If no expected output, check if result is not None

return result is not None

# Simple equality check (can be enhanced with semantic similarity)

return result == expected

def _calculate_completion_score(self, result: Any, task: Dict) -> float:

# Calculate partial completion score (0.0 to 1.0)

expected = task.get("expected_output")

if expected is None:

return 1.0 if result is not None else 0.0

# In production, use semantic similarity or custom scoring

# For now, simple check

if result == expected:

return 1.0

elif result is not None:

return 0.5 # Partial completion

else:

return 0.0

# Usage

evaluator = TaskCompletionEvaluator()

test_tasks = [

{"id": "task1", "input": "Research AI trends", "expected_output": "Research report"},

{"id": "task2", "input": "Analyze data", "expected_output": "Analysis results"}

]

results = evaluator.evaluate_task_completion(agent, test_tasks)

print(f"Completion rate: {results['completion_rate']:.2%}")

2. Accuracy and Correctness

Measure correctness of agent outputs:

from typing import Callable, Optional, List, Dict, Any

import numpy as np

class AccuracyEvaluator:

def __init__(self):

self.correct_predictions = 0

self.total_predictions = 0

def evaluate_accuracy(self, agent, test_cases: List[Dict],

validation_fn: Optional[Callable] = None) -> Dict:

# Evaluate accuracy of agent outputs

results = {

"correct": 0,

"incorrect": 0,

"total": len(test_cases),

"accuracy": 0.0,

"details": []

}

for test_case in test_cases:

input_data = test_case["input"]

expected = test_case.get("expected")

try:

output = agent.execute(input_data)

# Use custom validation function if provided

if validation_fn:

is_correct = validation_fn(output, expected)

else:

is_correct = self._default_validation(output, expected)

if is_correct:

results["correct"] += 1

else:

results["incorrect"] += 1

results["details"].append({

"input": input_data,

"output": output,

"expected": expected,

"correct": is_correct

})

except Exception as e:

results["incorrect"] += 1

results["details"].append({

"input": input_data,

"error": str(e),

"correct": False

})

results["accuracy"] = results["correct"] / results["total"] if results["total"] > 0 else 0.0

return results

def _default_validation(self, output: Any, expected: Any) -> bool:

# Default validation (exact match)

return output == expected

# Usage with custom validation

def semantic_validation(output: str, expected: str) -> bool:

# In production, use semantic similarity

# For now, simple substring check

return expected.lower() in output.lower() or output.lower() in expected.lower()

evaluator = AccuracyEvaluator()

test_cases = [

{"input": "What is AI?", "expected": "Artificial Intelligence"}

]

results = evaluator.evaluate_accuracy(agent, test_cases, validation_fn=semantic_validation)

3. Efficiency Metrics

Measure steps taken and time to complete tasks:

import time

from typing import List

class EfficiencyEvaluator:

def __init__(self):

self.step_counts = []

self.execution_times = []

self.token_usage = []

def evaluate_efficiency(self, agent, task: Dict) -> Dict:

# Measure efficiency metrics

start_time = time.time()

steps = 0

# Track agent execution

agent.set_step_callback(lambda: self._increment_steps())

try:

result = agent.execute(task["input"])

execution_time = time.time() - start_time

metrics = {

"steps_taken": steps,

"execution_time_seconds": execution_time,

"tokens_used": agent.get_tokens_used() if hasattr(agent, 'get_tokens_used') else 0,

"success": result is not None

}

self.step_counts.append(steps)

self.execution_times.append(execution_time)

return metrics

except Exception as e:

return {

"steps_taken": steps,

"execution_time_seconds": time.time() - start_time,

"error": str(e),

"success": False

}

def _increment_steps(self):

# Callback to track steps

if not hasattr(self, '_current_steps'):

self._current_steps = 0

self._current_steps += 1

def get_average_metrics(self) -> Dict:

# Calculate average efficiency metrics

if not self.step_counts:

return {}

return {

"average_steps": np.mean(self.step_counts),

"median_steps": np.median(self.step_counts),

"average_time": np.mean(self.execution_times),

"median_time": np.median(self.execution_times),

"min_steps": min(self.step_counts),

"max_steps": max(self.step_counts)

}

# Usage

efficiency = EfficiencyEvaluator()

metrics = efficiency.evaluate_efficiency(agent, {"input": "Complex task"})

average = efficiency.get_average_metrics()

4. Reliability and Consistency

Measure consistency across multiple runs:

from collections import Counter

import statistics

import time

import concurrent.futures

class ReliabilityEvaluator:

def __init__(self, num_runs: int = 10):

self.num_runs = num_runs

self.run_results = []

def evaluate_reliability(self, agent, task: Dict) -> Dict:

# Run agent multiple times and measure consistency

results = []

for run in range(self.num_runs):

try:

output = agent.execute(task["input"])

results.append({

"run": run + 1,

"output": output,

"success": output is not None

})

except Exception as e:

results.append({

"run": run + 1,

"error": str(e),

"success": False

})

# Calculate reliability metrics

success_count = sum(1 for r in results if r["success"])

success_rate = success_count / self.num_runs

# Measure output consistency

successful_outputs = [r["output"] for r in results if r["success"]]

consistency_score = self._calculate_consistency(successful_outputs)

return {

"success_rate": success_rate,

"consistency_score": consistency_score,

"num_successful": success_count,

"num_failed": self.num_runs - success_count,

"results": results

}

def _calculate_consistency(self, outputs: List[Any]) -> float:

# Calculate consistency score (0.0 to 1.0)

if not outputs:

return 0.0

if len(outputs) == 1:

return 1.0

# Count unique outputs

output_counts = Counter(str(output) for output in outputs)

most_common_count = output_counts.most_common(1)[0][1]

# Consistency = percentage of most common output

return most_common_count / len(outputs)

# Usage

reliability = ReliabilityEvaluator(num_runs=10)

reliability_results = reliability.evaluate_reliability(agent, {"input": "Test task"})

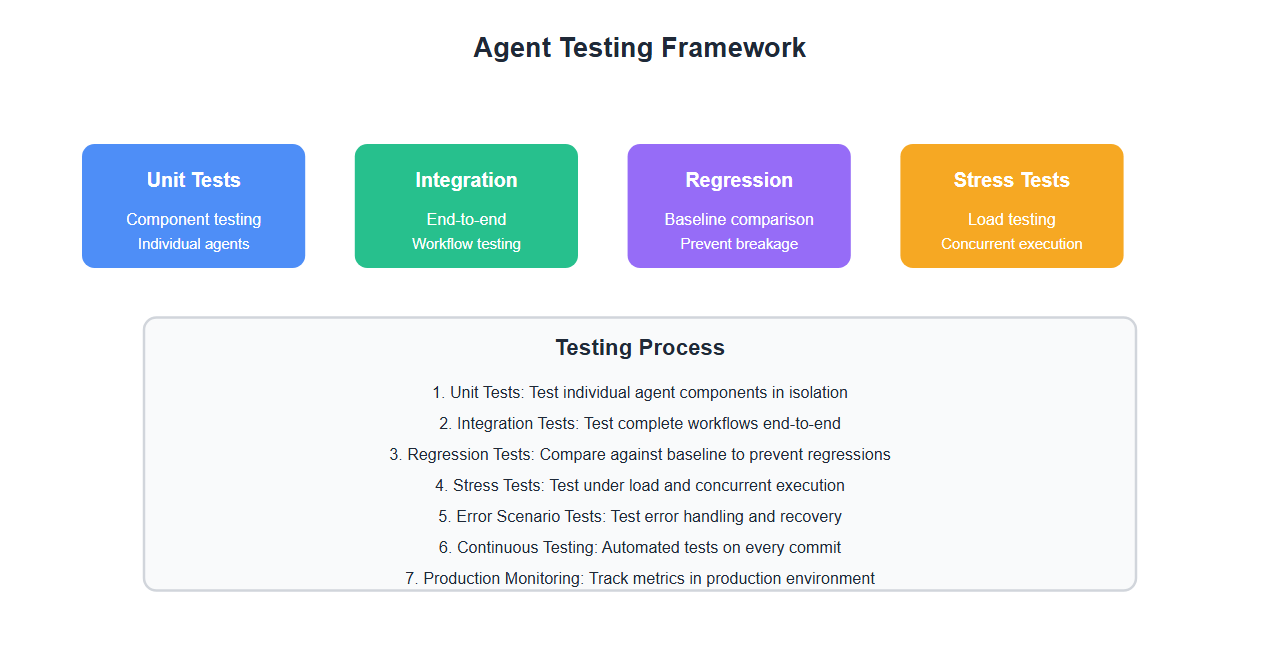

Comprehensive Testing Framework

Build a complete testing framework for agents:

class AgentTestingFramework:

def __init__(self):

self.test_suites = {}

self.evaluators = {

"completion": TaskCompletionEvaluator(),

"accuracy": AccuracyEvaluator(),

"efficiency": EfficiencyEvaluator(),

"reliability": ReliabilityEvaluator()

}

def add_test_suite(self, suite_name: str, test_cases: List[Dict]):

# Add a test suite

self.test_suites[suite_name] = test_cases

def run_all_tests(self, agent) -> Dict:

# Run all test suites

results = {

"suites": {},

"overall": {}

}

all_completion_results = []

all_accuracy_results = []

for suite_name, test_cases in self.test_suites.items():

suite_results = {

"completion": self.evaluators["completion"].evaluate_task_completion(agent, test_cases),

"accuracy": self.evaluators["accuracy"].evaluate_accuracy(agent, test_cases),

"efficiency": [self.evaluators["efficiency"].evaluate_efficiency(agent, tc) for tc in test_cases],

"reliability": [self.evaluators["reliability"].evaluate_reliability(agent, tc) for tc in test_cases[:3]] # Sample for reliability

}

results["suites"][suite_name] = suite_results

all_completion_results.append(suite_results["completion"])

all_accuracy_results.append(suite_results["accuracy"])

# Calculate overall metrics

results["overall"] = {

"average_completion_rate": np.mean([r["completion_rate"] for r in all_completion_results]),

"average_accuracy": np.mean([r["accuracy"] for r in all_accuracy_results]),

"total_test_cases": sum(len(cases) for cases in self.test_suites.values())

}

return results

def generate_report(self, results: Dict) -> str:

# Generate human-readable test report

report = "Agent Testing Report\n"

report += "=" * 50 + "\n\n"

report += f"Overall Metrics:\n"

report += f" Average Completion Rate: {results['overall']['average_completion_rate']:.2%}\n"

report += f" Average Accuracy: {results['overall']['average_accuracy']:.2%}\n"

report += f" Total Test Cases: {results['overall']['total_test_cases']}\n\n"

for suite_name, suite_results in results["suites"].items():

report += f"Test Suite: {suite_name}\n"

report += f" Completion Rate: {suite_results['completion']['completion_rate']:.2%}\n"

report += f" Accuracy: {suite_results['accuracy']['accuracy']:.2%}\n"

report += f" Average Steps: {np.mean([m['steps_taken'] for m in suite_results['efficiency']]):.1f}\n"

report += f" Average Time: {np.mean([m['execution_time_seconds'] for m in suite_results['efficiency']]):.2f}s\n\n"

return report

# Usage

framework = AgentTestingFramework()

framework.add_test_suite("basic_tasks", [

{"id": "t1", "input": "Task 1", "expected_output": "Result 1"},

{"id": "t2", "input": "Task 2", "expected_output": "Result 2"}

])

results = framework.run_all_tests(agent)

report = framework.generate_report(results)

print(report)

Testing Strategies

1. Unit Testing

Test individual agent components:

import unittest

class AgentUnitTests(unittest.TestCase):

def setUp(self):

self.agent = YourAgent() # Initialize your agent

def test_research_agent(self):

# Test research agent component

result = self.agent.research_agent({"current_task": "Test task"})

self.assertIsNotNone(result)

self.assertIn("research_results", result)

def test_analysis_agent(self):

# Test analysis agent component

state = {"research_results": {"findings": "Test findings"}}

result = self.agent.analysis_agent(state)

self.assertIn("analysis", result)

def test_state_transition(self):

# Test state transitions

initial_state = {"messages": []}

result = self.agent.process_state(initial_state)

self.assertGreater(len(result.get("messages", [])), 0)

# Run tests

if __name__ == '__main__':

unittest.main()

2. Integration Testing

Test agent workflows end-to-end:

class IntegrationTests:

def test_complete_workflow(self):

# Test complete agent workflow

agent = YourAgent()

result = agent.execute("Research and analyze market trends")

# Verify all steps completed

assert "research_results" in result

assert "analysis" in result

assert "output" in result

def test_error_recovery(self):

# Test error handling and recovery

agent = YourAgent()

# Simulate error

agent.simulate_error = True

result = agent.execute("Test task")

# Verify recovery

assert result.get("error_handled") == True

assert result.get("recovered") == True

3. Regression Testing

Ensure changes don’t break existing functionality:

class RegressionTests:

def __init__(self):

self.baseline_results = {}

self.current_results = {}

def establish_baseline(self, agent, test_suite: List[Dict]):

# Establish baseline performance

evaluator = TaskCompletionEvaluator()

self.baseline_results = evaluator.evaluate_task_completion(agent, test_suite)

def run_regression_tests(self, agent, test_suite: List[Dict]) -> Dict:

# Run tests and compare to baseline

evaluator = TaskCompletionEvaluator()

self.current_results = evaluator.evaluate_task_completion(agent, test_suite)

# Compare to baseline

regression = {

"baseline_completion": self.baseline_results["completion_rate"],

"current_completion": self.current_results["completion_rate"],

"regression": self.current_results["completion_rate"] < self.baseline_results["completion_rate"],

"improvement": self.current_results["completion_rate"] > self.baseline_results["completion_rate"]

}

return regression

4. Stress Testing

Test agent under load:

import concurrent.futures

import time

class StressTests:

def test_concurrent_execution(self, agent, num_concurrent: int = 10):

# Test agent with concurrent requests

tasks = [{"input": f"Task {i}"} for i in range(num_concurrent)]

start_time = time.time()

with concurrent.futures.ThreadPoolExecutor(max_workers=num_concurrent) as executor:

futures = [executor.submit(agent.execute, task["input"]) for task in tasks]

results = [f.result() for f in concurrent.futures.as_completed(futures)]

execution_time = time.time() - start_time

return {

"num_tasks": num_concurrent,

"execution_time": execution_time,

"throughput": num_concurrent / execution_time,

"success_rate": sum(1 for r in results if r is not None) / len(results)

}

def test_long_running_tasks(self, agent, duration_seconds: int = 60):

# Test agent with long-running tasks

start_time = time.time()

tasks_completed = 0

while time.time() - start_time < duration_seconds:

try:

agent.execute("Long running task")

tasks_completed += 1

except Exception as e:

# Track errors

pass

return {

"duration": duration_seconds,

"tasks_completed": tasks_completed,

"tasks_per_second": tasks_completed / duration_seconds

}

Best Practices: Lessons from 10+ Implementations

From developing evaluation frameworks:

- Comprehensive test suites: Cover all agent capabilities. Include edge cases and error scenarios.

- Real-world scenarios: Test on actual use cases. Synthetic tests miss real issues.

- Continuous evaluation: Monitor performance in production. Set up automated testing.

- Human evaluation: Include human judgment for complex tasks. Automated metrics aren't always sufficient.

- Baseline establishment: Establish baselines before changes. Compare against baselines.

- Multiple metrics: Use multiple metrics. Single metrics miss important aspects.

- Regression testing: Test that changes don't break existing functionality. Automate regression tests.

- Stress testing: Test under load. Production conditions differ from development.

- Error scenario testing: Test error handling. Agents must handle failures gracefully.

- Performance benchmarking: Benchmark performance regularly. Track improvements and regressions.

- Documentation: Document test cases and results. Enables reproducibility.

- Automation: Automate testing. Manual testing doesn't scale.

Common Mistakes and How to Avoid Them

What I learned the hard way:

- Testing only happy paths: Test error scenarios. Real systems face errors constantly.

- Ignoring non-determinism: Run tests multiple times. Non-deterministic behavior requires multiple runs.

- No baseline: Establish baselines. Can't measure improvement without baselines.

- Single metric focus: Use multiple metrics. Single metrics miss important aspects.

- No production monitoring: Monitor in production. Development tests miss production issues.

- Insufficient test coverage: Cover all capabilities. Gaps in coverage lead to production failures.

- No regression testing: Test that changes don't break things. Changes introduce regressions.

- Ignoring efficiency: Measure efficiency. Slow agents are unusable.

- No human evaluation: Include human judgment. Automated metrics have limitations.

- Poor test data: Use realistic test data. Synthetic data misses real patterns.

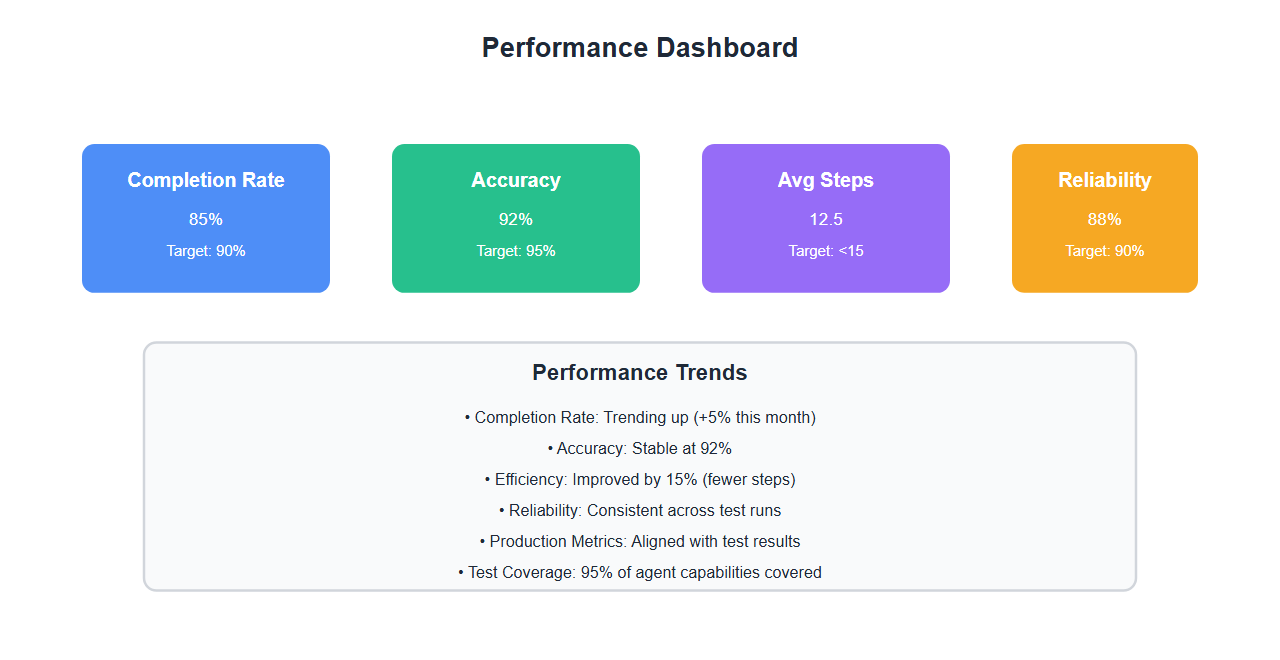

Real-World Example: Research Agent Evaluation

We built a comprehensive evaluation framework for our research agent:

- Test suite: 200+ test cases covering research, analysis, and writing tasks

- Metrics: Completion rate, accuracy, efficiency, reliability

- Baseline: Established baseline with initial version

- Continuous testing: Automated tests run on every commit

- Production monitoring: Track metrics in production

Results: Caught 40% more issues before production, improved success rate by 35%, reduced production incidents by 60%.

🎯 Key Takeaway

Agent evaluation requires comprehensive metrics and testing. Measure task completion, accuracy, efficiency, and reliability. Use real-world scenarios, establish baselines, and monitor continuously. With proper evaluation, you ensure agent quality and catch issues before production.

Bottom Line

Agent evaluation requires comprehensive metrics and testing strategies. Measure task completion, accuracy, efficiency, and reliability. Use real-world scenarios, establish baselines, and monitor continuously. With proper evaluation, you ensure agent quality and catch issues before they reach production.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.