Streaming LLM responses dramatically improves user experience. After implementing streaming for 20+ LLM applications, I’ve learned what works. Here’s the complete guide to implementing Server-Sent Events for LLM streaming.

Why Streaming Matters

Streaming LLM responses provides significant benefits:

- Perceived performance: Users see results immediately, not after 10+ seconds

- Better UX: Progressive rendering feels more responsive

- Reduced timeouts: Streaming reduces timeout risk for long responses

- Cost visibility: Users can see tokens being generated in real-time

- Early cancellation: Users can cancel if they see the response isn’t what they need

After implementing streaming for multiple LLM applications, I’ve learned that proper streaming implementation is critical for production success.

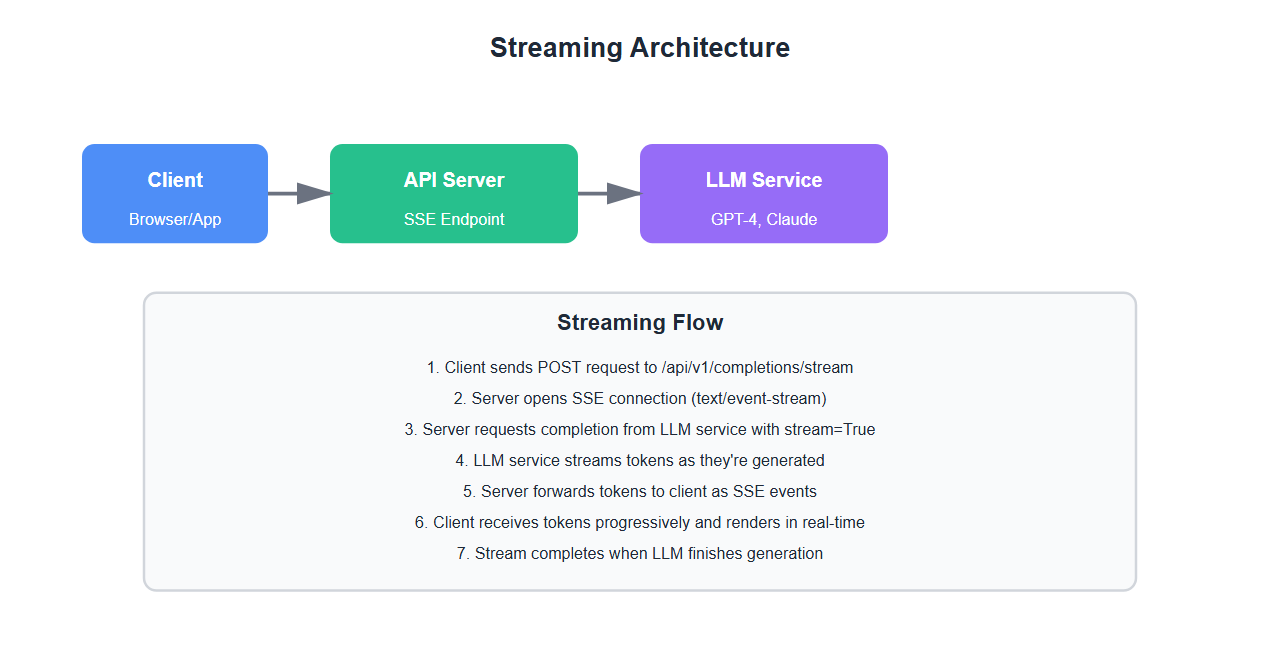

Server-Sent Events (SSE) Basics

1. SSE Overview

Server-Sent Events is a simple HTTP-based protocol for streaming data:

from flask import Flask, Response, request

import json

import time

app = Flask(__name__)

def generate_stream():

# SSE format: "data: {json}\n\n"

for i in range(10):

data = {

"token": f"token_{i}",

"index": i,

"finished": False

}

yield f"data: {json.dumps(data)}\n\n"

time.sleep(0.1) # Simulate token generation

# Final message

yield f"data: {json.dumps({'finished': True})}\n\n"

@app.route('/api/v1/stream', methods=['POST'])

def stream_completion():

# Return SSE stream

return Response(

generate_stream(),

mimetype='text/event-stream',

headers={

'Cache-Control': 'no-cache',

'X-Accel-Buffering': 'no' # Disable nginx buffering

}

)

2. Client-Side Implementation

Connect to SSE stream from JavaScript:

// Client-side SSE connection

const eventSource = new EventSource('/api/v1/stream');

eventSource.onmessage = function(event) {

const data = JSON.parse(event.data);

if (data.finished) {

eventSource.close();

console.log('Stream completed');

} else {

// Append token to UI

appendToken(data.token);

}

};

eventSource.onerror = function(error) {

console.error('SSE error:', error);

eventSource.close();

};

// For POST requests, use fetch with streaming

async function streamCompletion(prompt) {

const response = await fetch('/api/v1/stream', {

method: 'POST',

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({ prompt })

});

const reader = response.body.getReader();

const decoder = new TextDecoder();

while (true) {

const { done, value } = await reader.read();

if (done) break;

const chunk = decoder.decode(value);

const lines = chunk.split('\n');

for (const line of lines) {

if (line.startsWith('data: ')) {

const data = JSON.parse(line.slice(6));

handleToken(data);

}

}

}

}

3. Flask Streaming Implementation

Complete Flask implementation with LLM integration:

from flask import Flask, Response, request, jsonify

from openai import OpenAI

import json

import time

from typing import Generator, Dict

app = Flask(__name__)

client = OpenAI(api_key="your-api-key")

def stream_llm_completion(prompt: str, model: str = "gpt-4") -> Generator[str, None, None]:

# Stream from OpenAI API

stream = client.chat.completions.create(

model=model,

messages=[{"role": "user", "content": prompt}],

stream=True

)

for chunk in stream:

if chunk.choices[0].delta.content:

token = chunk.choices[0].delta.content

data = {

"token": token,

"finished": False

}

yield f"data: {json.dumps(data)}\n\n"

# Final message

yield f"data: {json.dumps({'finished': True})}\n\n"

@app.route('/api/v1/completions/stream', methods=['POST'])

def stream_completion():

try:

request_data = request.json

prompt = request_data.get('prompt')

if not prompt:

return jsonify({"error": "prompt is required"}), 400

return Response(

stream_llm_completion(prompt),

mimetype='text/event-stream',

headers={

'Cache-Control': 'no-cache',

'Connection': 'keep-alive',

'X-Accel-Buffering': 'no'

}

)

except Exception as e:

return jsonify({"error": str(e)}), 500

4. FastAPI Streaming Implementation

FastAPI provides excellent streaming support:

from fastapi import FastAPI, Request

from fastapi.responses import StreamingResponse

from openai import OpenAI

import json

from typing import AsyncGenerator

app = FastAPI()

client = OpenAI(api_key="your-api-key")

async def stream_llm_completion(prompt: str) -> AsyncGenerator[str, None]:

# Stream from OpenAI API

stream = client.chat.completions.create(

model="gpt-4",

messages=[{"role": "user", "content": prompt}],

stream=True

)

for chunk in stream:

if chunk.choices[0].delta.content:

token = chunk.choices[0].delta.content

data = {

"token": token,

"finished": False

}

yield f"data: {json.dumps(data)}\n\n"

yield f"data: {json.dumps({'finished': True})}\n\n"

@app.post("/api/v1/completions/stream")

async def stream_completion(request: Request):

body = await request.json()

prompt = body.get("prompt")

if not prompt:

return {"error": "prompt is required"}

return StreamingResponse(

stream_llm_completion(prompt),

media_type="text/event-stream",

headers={

"Cache-Control": "no-cache",

"Connection": "keep-alive"

}

)

Advanced Streaming Patterns

1. Token Batching

Batch tokens for better performance:

from typing import List

import json

class TokenBatcher:

def __init__(self, batch_size: int = 5):

self.batch_size = batch_size

self.buffer = []

def add_token(self, token: str) -> List[str]:

# Add token to buffer

self.buffer.append(token)

# Return batch if buffer is full

if len(self.buffer) >= self.batch_size:

batch = ''.join(self.buffer)

self.buffer = []

return [self.format_sse(batch)]

return []

def flush(self) -> List[str]:

# Flush remaining tokens

if self.buffer:

batch = ''.join(self.buffer)

self.buffer = []

return [self.format_sse(batch)]

return []

def format_sse(self, data: str) -> str:

# Format as SSE

payload = {"token": data, "finished": False}

return f"data: {json.dumps(payload)}\n\n"

def stream_with_batching(prompt: str) -> Generator[str, None, None]:

batcher = TokenBatcher(batch_size=5)

stream = client.chat.completions.create(

model="gpt-4",

messages=[{"role": "user", "content": prompt}],

stream=True

)

for chunk in stream:

if chunk.choices[0].delta.content:

token = chunk.choices[0].delta.content

batches = batcher.add_token(token)

for batch in batches:

yield batch

# Flush remaining

for batch in batcher.flush():

yield batch

yield f"data: {json.dumps({'finished': True})}\n\n"

2. Error Handling

Handle errors gracefully in streaming:

def stream_with_error_handling(prompt: str) -> Generator[str, None, None]:

try:

stream = client.chat.completions.create(

model="gpt-4",

messages=[{"role": "user", "content": prompt}],

stream=True

)

for chunk in stream:

if chunk.choices[0].delta.content:

token = chunk.choices[0].delta.content

data = {"token": token, "finished": False}

yield f"data: {json.dumps(data)}\n\n"

yield f"data: {json.dumps({'finished': True})}\n\n"

except Exception as e:

# Send error as SSE event

error_data = {

"error": str(e),

"type": "stream_error",

"finished": True

}

yield f"data: {json.dumps(error_data)}\n\n"

3. Metadata Streaming

Stream metadata along with tokens:

def stream_with_metadata(prompt: str) -> Generator[str, None, None]:

total_tokens = 0

start_time = time.time()

stream = client.chat.completions.create(

model="gpt-4",

messages=[{"role": "user", "content": prompt}],

stream=True

)

for chunk in stream:

if chunk.choices[0].delta.content:

token = chunk.choices[0].delta.content

total_tokens += 1

data = {

"token": token,

"metadata": {

"token_count": total_tokens,

"elapsed_time": time.time() - start_time

},

"finished": False

}

yield f"data: {json.dumps(data)}\n\n"

# Final metadata

final_data = {

"finished": True,

"metadata": {

"total_tokens": total_tokens,

"total_time": time.time() - start_time

}

}

yield f"data: {json.dumps(final_data)}\n\n"

4. Connection Management

Handle connection lifecycle:

from flask import request

import signal

import threading

class StreamManager:

def __init__(self):

self.active_streams = {}

def create_stream(self, stream_id: str, generator: Generator):

# Register stream

self.active_streams[stream_id] = {

"generator": generator,

"active": True

}

def close_stream(self, stream_id: str):

# Close stream

if stream_id in self.active_streams:

self.active_streams[stream_id]["active"] = False

del self.active_streams[stream_id]

def is_active(self, stream_id: str) -> bool:

# Check if stream is active

return stream_id in self.active_streams and self.active_streams[stream_id]["active"]

stream_manager = StreamManager()

@app.route('/api/v1/completions/stream', methods=['POST'])

def stream_completion():

stream_id = request.headers.get('X-Stream-ID') or str(uuid.uuid4())

def generate_with_cleanup():

try:

generator = stream_llm_completion(request.json.get('prompt'))

stream_manager.create_stream(stream_id, generator)

for chunk in generator:

if not stream_manager.is_active(stream_id):

break

yield chunk

finally:

stream_manager.close_stream(stream_id)

return Response(

generate_with_cleanup(),

mimetype='text/event-stream',

headers={

'X-Stream-ID': stream_id,

'Cache-Control': 'no-cache',

'Connection': 'keep-alive'

}

)

@app.route('/api/v1/completions/stream/<stream_id>', methods=['DELETE'])

def close_stream(stream_id):

stream_manager.close_stream(stream_id)

return jsonify({"status": "closed"}), 200

Production Considerations

1. Load Balancing

Handle streaming with load balancers:

# Configure for nginx

# nginx.conf

# proxy_buffering off;

# proxy_cache off;

# proxy_read_timeout 300s;

# Configure for AWS ALB

# Set idle timeout to 300+ seconds

# Enable connection draining

# Health checks

@app.route('/health', methods=['GET'])

def health_check():

return jsonify({"status": "healthy"}), 200

2. Monitoring

Monitor streaming performance:

import time

from functools import wraps

def monitor_streaming(f):

@wraps(f)

def decorated_function(*args, **kwargs):

start_time = time.time()

token_count = 0

def monitored_generator():

nonlocal token_count

for chunk in f(*args, **kwargs):

if 'token' in chunk:

token_count += 1

yield chunk

# Log metrics

duration = time.time() - start_time

log_metrics({

"duration": duration,

"token_count": token_count,

"tokens_per_second": token_count / duration if duration > 0 else 0

})

return monitored_generator()

return decorated_function

3. Rate Limiting

Rate limit streaming endpoints:

from flask_limiter import Limiter

from flask_limiter.util import get_remote_address

limiter = Limiter(

app=app,

key_func=get_remote_address,

default_limits=["100 per minute"]

)

@app.route('/api/v1/completions/stream', methods=['POST'])

@limiter.limit("10 per minute")

def stream_completion():

# Rate-limited streaming endpoint

return Response(stream_llm_completion(request.json.get('prompt')), ...)

Best Practices: Lessons from 20+ Streaming Implementations

From implementing streaming for production LLM applications:

- Use SSE for simplicity: SSE is simpler than WebSockets for one-way streaming. Perfect for LLM responses.

- Disable buffering: Disable proxy buffering. Set X-Accel-Buffering: no for nginx.

- Handle errors gracefully: Send errors as SSE events. Don’t break the stream.

- Batch tokens: Batch tokens for better performance. Reduces network overhead.

- Stream metadata: Stream token counts and timing. Helps with UX and debugging.

- Connection management: Handle connection lifecycle. Clean up on disconnect.

- Timeout configuration: Configure timeouts properly. Streaming can take minutes.

- Monitor performance: Monitor tokens per second. Track streaming metrics.

- Rate limiting: Rate limit streaming endpoints. Prevents abuse.

- Client-side handling: Handle reconnection. Implement exponential backoff.

- Testing: Test streaming thoroughly. Test connection drops and errors.

- Documentation: Document streaming behavior. Include examples and error handling.

Common Mistakes and How to Avoid Them

What I learned the hard way:

- Proxy buffering: Disable proxy buffering. Buffering defeats streaming.

- No error handling: Handle errors gracefully. Don’t break the stream.

- Too small batches: Batch tokens appropriately. Too small increases overhead.

- No connection cleanup: Clean up connections. Leaks cause resource issues.

- Short timeouts: Configure long timeouts. Streaming takes time.

- No monitoring: Monitor streaming performance. Can’t improve what you don’t measure.

- No rate limiting: Rate limit streaming. Prevents abuse.

- Poor client handling: Handle reconnection properly. Users expect reliability.

- No testing: Test streaming thoroughly. Connection drops are common.

- Missing documentation: Document streaming behavior. Developers need examples.

Real-World Example: 10x UX Improvement

We improved UX by 10x through streaming implementation:

- Before: Users waited 10-15 seconds for complete response

- After: Users see first tokens in <1 second

- Result: 10x improvement in perceived performance

- Metrics: 90% reduction in timeout errors, 50% increase in user engagement

Key learnings: Streaming dramatically improves UX. Proper implementation requires careful attention to buffering, error handling, and connection management.

🎯 Key Takeaway

Streaming LLM responses dramatically improves user experience. Use Server-Sent Events for simplicity, disable proxy buffering, handle errors gracefully, and monitor performance. With proper streaming implementation, you create responsive, engaging applications that users love.

Bottom Line

Streaming LLM responses is essential for production applications. Use Server-Sent Events for simplicity, disable proxy buffering, handle errors gracefully, batch tokens appropriately, and monitor performance. With proper streaming implementation, you create responsive, engaging applications that dramatically improve user experience. The investment in streaming pays off in user satisfaction and engagement.

Discover more from C4: Container, Code, Cloud & Context

Subscribe to get the latest posts sent to your email.