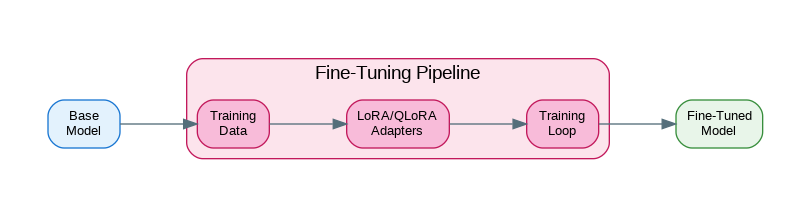

Introduction: Fine-tuning transforms general-purpose LLMs into specialized models that excel at your specific tasks. Prompting can get you far, but fine-tuning reliably delivers things that prompting alone struggles with: consistent output formats, domain-specific knowledge, lower latency from shorter prompts, and behaviors that would otherwise require extensive few-shot examples in every request. This guide covers the practical side of LLM fine-tuning: preparing training data, choosing between full fine-tuning and parameter-efficient methods like LoRA and QLoRA, implementing training with proper evaluation, and running fine-tuning jobs as a service. Whether you’re building a customer service bot that matches your brand voice or a code assistant trained on your codebase, these patterns will help you build models that fit your needs.

Training Data Preparation

```python
from dataclasses import dataclass, field
from typing import Any, Optional
from abc import ABC, abstractmethod
import json
import re


@dataclass
class TrainingExample:
    """A single training example."""
    instruction: str
    input: str = ""
    output: str = ""
    system: str = ""

    def to_alpaca_format(self) -> dict:
        """Convert to Alpaca format."""
        return {
            "instruction": self.instruction,
            "input": self.input,
            "output": self.output
        }

    def to_chat_format(self) -> dict:
        """Convert to chat (messages) format."""
        messages = []
        if self.system:
            messages.append({"role": "system", "content": self.system})
        user_content = self.instruction
        if self.input:
            user_content += f"\n\n{self.input}"
        messages.append({"role": "user", "content": user_content})
        messages.append({"role": "assistant", "content": self.output})
        return {"messages": messages}

    def to_completion_format(self, template: Optional[str] = None) -> dict:
        """Convert to prompt/completion format."""
        if template:
            # The template must use {instruction} and {input} placeholders
            prompt = template.format(
                instruction=self.instruction,
                input=self.input
            )
        else:
            prompt = f"### Instruction:\n{self.instruction}"
            if self.input:
                prompt += f"\n\n### Input:\n{self.input}"
            prompt += "\n\n### Response:\n"
        return {
            "prompt": prompt,
            "completion": self.output
        }


class DatasetFormatter:
    """Format datasets for fine-tuning."""

    def __init__(self, format_type: str = "chat"):
        self.format_type = format_type

    def format_examples(self, examples: list[TrainingExample]) -> list[dict]:
        """Format all examples."""
        if self.format_type == "alpaca":
            return [e.to_alpaca_format() for e in examples]
        elif self.format_type == "chat":
            return [e.to_chat_format() for e in examples]
        elif self.format_type == "completion":
            return [e.to_completion_format() for e in examples]
        raise ValueError(f"Unknown format: {self.format_type}")

    def save_jsonl(self, examples: list[dict], path: str):
        """Save as JSONL (one JSON object per line, UTF-8)."""
        with open(path, "w", encoding="utf-8") as f:
            for example in examples:
                f.write(json.dumps(example, ensure_ascii=False) + "\n")

    def load_jsonl(self, path: str) -> list[dict]:
        """Load from a JSONL file, skipping blank lines."""
        examples = []
        with open(path, "r", encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    examples.append(json.loads(line))
        return examples


class DataAugmenter:
    """Augment training data with an LLM."""

    def __init__(self, llm_client: Any = None):
        # Assumed client interface: `await llm.complete(prompt)` returns an
        # object whose `.content` holds the reply text
        self.llm = llm_client

    async def paraphrase(self, example: TrainingExample) -> list[TrainingExample]:
        """Generate paraphrased versions of the instruction."""
        if not self.llm:
            return [example]
        prompt = f"""Paraphrase this instruction in 3 different ways while keeping the same meaning.

Original: {example.instruction}

Paraphrases (one per line):"""
        response = await self.llm.complete(prompt)
        # Strip list markers such as "1.", "2)" or "-" without eating digits
        # that belong to the paraphrase itself (e.g. "3D printing ...")
        candidates = (
            re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
            for line in response.content.split("\n")
        )
        paraphrases = [p for p in candidates if p][:3]
        return [
            TrainingExample(
                instruction=p,
                input=example.input,
                output=example.output,
                system=example.system
            )
            for p in paraphrases
        ]

    async def generate_variations(
        self,
        example: TrainingExample,
        num_variations: int = 3
    ) -> list[TrainingExample]:
        """Generate input/output variations."""
        if not self.llm:
            return [example]
        prompt = f"""Generate {num_variations} similar examples with different inputs and outputs.

Original:
Instruction: {example.instruction}
Input: {example.input}
Output: {example.output}

Generate variations in JSON format:"""
        response = await self.llm.complete(prompt)
        # Parsing is left as a stub: a full implementation would parse the
        # JSON in response.content into TrainingExample objects
        return [example]
```
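`generate_variations` stops after the LLM call and returns the original example unchanged. A minimal sketch of the missing parsing step follows; it assumes (hypothetically) that the model replies with a JSON array of objects carrying `instruction`, `input`, and `output` keys, possibly surrounded by extra prose. `parse_variations` is an illustrative helper, not part of any library.

```python
import json
import re


def parse_variations(text: str) -> list[dict]:
    """Extract {instruction, input, output} dicts from an LLM reply.

    Assumes the reply contains a JSON array, possibly wrapped in extra text.
    """
    # Grab the span from the first "[" to the last "]"; models often add prose
    match = re.search(r"\[.*\]", text, re.DOTALL)
    if not match:
        return []
    try:
        items = json.loads(match.group(0))
    except json.JSONDecodeError:
        return []
    results = []
    for item in items:
        # Drop malformed entries instead of failing the whole batch
        if not isinstance(item, dict):
            continue
        instruction = str(item.get("instruction", "")).strip()
        output = str(item.get("output", "")).strip()
        if instruction and output:
            results.append({
                "instruction": instruction,
                "input": str(item.get("input", "")),
                "output": output,
            })
    return results


reply = 'Sure!\n[{"instruction": "Add the numbers", "input": "2, 3", "output": "5"}, {"note": 1}]'
print(parse_variations(reply))
```

Each resulting dict maps directly onto `TrainingExample(**item)`. Generated variations should still go through the same validation as hand-written data before they join the training set.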

```python
class DataValidator:
    """Validate training data quality."""

    def __init__(self):
        self.issues: list[dict] = []

    def validate(self, examples: list[TrainingExample]) -> dict:
        """Validate dataset."""
        self.issues = []
        for i, example in enumerate(examples):
            # Check for empty fields
            if not example.instruction.strip():
                self.issues.append({
                    "index": i,
                    "issue": "empty_instruction",
                    "severity": "error"
                })
            if not example.output.strip():
                self.issues.append({
                    "index": i,
                    "issue": "empty_output",
                    "severity": "error"
                })
            # Check for very short outputs
            if len(example.output.split()) < 3:
                self.issues.append({
                    "index": i,
                    "issue": "short_output",
                    "severity": "warning"
                })
            # Check for duplicate instructions
            for j, other in enumerate(examples[:i]):
                if example.instruction == other.instruction:
                    self.issues.append({
                        "index": i,
                        "issue": "duplicate_instruction",
                        "duplicate_of": j,
                        "severity": "warning"
                    })
        errors = [i for i in self.issues if i["severity"] == "error"]
        warnings = [i for i in self.issues if i["severity"] == "warning"]
        return {
            "valid": len(errors) == 0,
            "total_examples": len(examples),
            "errors": len(errors),
            "warnings": len(warnings),
            "issues": self.issues
        }
```
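The duplicate check in `DataValidator` compares every example against all earlier ones (O(n²) comparisons) and only catches byte-identical instructions. A sketch of a single-pass alternative, assuming you also want instructions that differ only in case, punctuation, or spacing to count as duplicates; `normalize` and `find_duplicates` are illustrative names:

```python
import re
import string

_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse runs of whitespace."""
    text = text.lower().translate(_PUNCT_TABLE)
    return re.sub(r"\s+", " ", text).strip()


def find_duplicates(instructions: list[str]) -> list[tuple[int, int]]:
    """Return (index, first_index) pairs; one dict lookup per example, O(n) overall."""
    first_seen: dict[str, int] = {}
    duplicates = []
    for i, text in enumerate(instructions):
        key = normalize(text)
        if key in first_seen:
            duplicates.append((i, first_seen[key]))
        else:
            first_seen[key] = i
    return duplicates


print(find_duplicates([
    "Summarize this article.",
    "Translate to French.",
    "summarize   this ARTICLE",
]))
```

Reporting only the first occurrence for each duplicate, rather than every earlier copy, also keeps the issue list short when one instruction appears many times.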

```python
import random


class DatasetSplitter:
    """Split dataset into train/val/test."""

    def __init__(
        self,
        train_ratio: float = 0.8,
        val_ratio: float = 0.1,
        test_ratio: float = 0.1,
        seed: int = 42
    ):
        if abs(train_ratio + val_ratio + test_ratio - 1.0) > 1e-6:
            raise ValueError("train/val/test ratios must sum to 1.0")
        self.train_ratio = train_ratio
        self.val_ratio = val_ratio
        self.test_ratio = test_ratio
        self.seed = seed

    def split(
        self,
        examples: list[TrainingExample]
    ) -> tuple[list, list, list]:
        """Split into train/val/test."""
        # A local RNG keeps the split reproducible without reseeding the
        # global `random` module for the rest of the program
        rng = random.Random(self.seed)
        shuffled = examples.copy()
        rng.shuffle(shuffled)
        n = len(shuffled)
        train_end = int(n * self.train_ratio)
        val_end = train_end + int(n * self.val_ratio)
        # The test split takes the remainder, so rounding never drops examples
        return (
            shuffled[:train_end],
            shuffled[train_end:val_end],
            shuffled[val_end:]
        )
```

LoRA and QLoRA Fine-Tuning

```python
from dataclasses import dataclass
from typing import Any, Optional
import torch


@dataclass
class LoRAConfig:
    """LoRA configuration."""
    r: int = 8  # Rank of the low-rank update matrices
    lora_alpha: int = 16  # The update is scaled by lora_alpha / r
    lora_dropout: float = 0.05
    target_modules: Optional[list[str]] = None  # Modules to apply LoRA to
    bias: str = "none"  # "none", "all", or "lora_only"
    task_type: str = "CAUSAL_LM"

    def __post_init__(self):
        if self.target_modules is None:
            # Attention projection names used by Llama-style models;
            # other architectures name these layers differently
            self.target_modules = ["q_proj", "v_proj", "k_proj", "o_proj"]


@dataclass
class QLoRAConfig(LoRAConfig):
    """QLoRA configuration (LoRA + 4-bit quantization)."""
    load_in_4bit: bool = True
    bnb_4bit_compute_dtype: str = "float16"  # Name of a torch dtype, e.g. "bfloat16"
    bnb_4bit_quant_type: str = "nf4"
    bnb_4bit_use_double_quant: bool = True
```
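In `LoRAConfig`, `r` is the rank of the two small matrices LoRA trains beside each frozen weight: for a d_out × d_in projection it adds B (d_out × r) and A (r × d_in), that is r·(d_out + d_in) parameters, and scales their product by lora_alpha / r (2.0 with the defaults above). A quick sketch of what that means in parameter counts, assuming a hypothetical model with 32 layers, hidden size 4096, and square q/k/v/o projections (models with grouped-query attention have smaller k and v projections):

```python
def lora_params_per_matrix(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds beside one frozen d_out x d_in weight."""
    return r * (d_out + d_in)


# Hypothetical model shape (an assumption, not a specific checkpoint)
num_layers, hidden, modules_per_layer = 32, 4096, 4  # q_proj, k_proj, v_proj, o_proj

frozen = hidden * hidden * modules_per_layer * num_layers
for r in (8, 16, 32):
    trainable = lora_params_per_matrix(hidden, hidden, r) * modules_per_layer * num_layers
    print(f"r={r:>2}: {trainable:,} trainable params "
          f"({trainable / frozen:.2%} of the targeted weights)")
```

With r=8 that is 8,388,608 trainable parameters, roughly 0.39% of the 2.1 billion weights in those projections. Doubling r doubles the count, which is why raising the rank is a comparatively cheap way to add adapter capacity.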

```python
class LoRATrainer:
    """Train models with LoRA."""

    def __init__(
        self,
        model_name: str,
        config: LoRAConfig,
        output_dir: str
    ):
        self.model_name = model_name
        self.config = config
        self.output_dir = output_dir
        self.model = None
        self.tokenizer = None

    def load_model(self):
        """Load base model with LoRA."""
        from transformers import AutoModelForCausalLM, AutoTokenizer
        from peft import get_peft_model, LoraConfig, TaskType

        # Load tokenizer
        self.tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        if self.tokenizer.pad_token is None:
            self.tokenizer.pad_token = self.tokenizer.eos_token

        # Load model
        self.model = AutoModelForCausalLM.from_pretrained(
            self.model_name,
            torch_dtype=torch.float16,
            device_map="auto"
        )

        # Apply LoRA: base weights stay frozen, only adapter weights train
        peft_config = LoraConfig(
            r=self.config.r,
            lora_alpha=self.config.lora_alpha,
            lora_dropout=self.config.lora_dropout,
            target_modules=self.config.target_modules,
            bias=self.config.bias,
            task_type=TaskType.CAUSAL_LM
        )
        self.model = get_peft_model(self.model, peft_config)
        self.model.print_trainable_parameters()

    def prepare_dataset(self, examples: list[dict], max_length: int = 2048) -> Any:
        """Tokenize chat-format or prompt/completion-format examples."""
        from datasets import Dataset

        def tokenize(example):
            if "messages" in example:
                # Chat format: render with the model's own chat template
                text = self.tokenizer.apply_chat_template(
                    example["messages"],
                    tokenize=False
                )
            elif "prompt" in example:
                # Completion format: append EOS to mark where the response ends
                text = example["prompt"] + example["completion"] + self.tokenizer.eos_token
            else:
                text = example.get("text", "")
            # No padding here: the data collator pads each batch dynamically
            return self.tokenizer(
                text,
                truncation=True,
                max_length=max_length
            )

        dataset = Dataset.from_list(examples)
        return dataset.map(tokenize, remove_columns=dataset.column_names)

    def train(
        self,
        train_dataset: Any,
        eval_dataset: Any = None,
        num_epochs: int = 3,
        batch_size: int = 4,
        learning_rate: float = 2e-4,
        gradient_accumulation_steps: int = 4
    ):
        """Train the model and save the LoRA adapter."""
        from transformers import TrainingArguments, Trainer, DataCollatorForLanguageModeling

        training_args = TrainingArguments(
            output_dir=self.output_dir,
            num_train_epochs=num_epochs,
            per_device_train_batch_size=batch_size,
            per_device_eval_batch_size=batch_size,
            gradient_accumulation_steps=gradient_accumulation_steps,
            learning_rate=learning_rate,
            weight_decay=0.01,
            warmup_ratio=0.03,
            lr_scheduler_type="cosine",
            logging_steps=10,
            save_strategy="epoch",
            # Called `evaluation_strategy` in older transformers releases
            eval_strategy="epoch" if eval_dataset is not None else "no",
            # fp16 AMP needs float32 trainable weights; recent PEFT versions
            # upcast the adapter weights by default
            fp16=True,
            report_to="tensorboard"
        )

        # mlm=False: causal-LM labels are a copy of input_ids with padding set
        # to -100. Because pad_token is the EOS token here, real EOS tokens are
        # masked too; use a dedicated pad token if the model must learn to stop.
        data_collator = DataCollatorForLanguageModeling(
            tokenizer=self.tokenizer,
            mlm=False
        )

        trainer = Trainer(
            model=self.model,
            args=training_args,
            train_dataset=train_dataset,
            eval_dataset=eval_dataset,
            data_collator=data_collator
        )
        trainer.train()

        # Saves only the LoRA adapter weights, not the full base model
        self.model.save_pretrained(self.output_dir)
        self.tokenizer.save_pretrained(self.output_dir)

    def merge_and_save(self, output_path: str):
        """Merge LoRA weights into the base model and save a standalone copy."""
        merged_model = self.model.merge_and_unload()
        merged_model.save_pretrained(output_path)
        self.tokenizer.save_pretrained(output_path)
```
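`merge_and_save` relies on LoRA's update being linear: the merged weight is W' = W + (lora_alpha / r) · B A, so it produces exactly what the base weight plus the adapter path produces. A plain-Python sketch on toy matrices (all values made up for illustration) that checks the two paths agree:

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def add_scaled(a: list[list[float]], b: list[list[float]], scale: float) -> list[list[float]]:
    """Elementwise a + scale * b."""
    return [[x + scale * y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


r, lora_alpha = 2, 4
scaling = lora_alpha / r                  # LoRA scales B @ A by lora_alpha / r

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]   # frozen weight, d_out=2 x d_in=3
A = [[0.5, -1.0, 0.0], [1.0, 0.0, 0.5]]   # r x d_in
B = [[0.1, 0.0], [0.0, 0.2]]              # d_out x r; LoRA initializes B to zero,
                                          # these values stand in for trained ones
x = [[1.0], [2.0], [3.0]]                 # one input as a column vector

# Unmerged: base output plus the scaled low-rank path, W x + scaling * B (A x)
y_adapter = add_scaled(matmul(W, x), matmul(B, matmul(A, x)), scaling)

# Merged: fold the update into one matrix, W' = W + scaling * (B A)
W_merged = add_scaled(W, matmul(B, A), scaling)
y_merged = matmul(W_merged, x)

print(y_adapter, y_merged)  # equal up to floating-point rounding
```

Because B starts at zero, training begins from exactly the base model. After merging, inference runs on one dense matrix per layer with no adapter overhead, at the cost of no longer being able to swap adapters on a shared base.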

```python
class QLoRATrainer(LoRATrainer):
    """Train with QLoRA (4-bit quantization + LoRA).

    Note: the inherited merge_and_save merges into the 4-bit base weights.
    For a full-precision merged model, reload the base model in 16-bit,
    apply the saved adapter, and merge that instead.
    """

    def __init__(
        self,
        model_name: str,
        config: QLoRAConfig,
        output_dir: str
    ):
        super().__init__(model_name, config, output_dir)
        self.qlora_config = config

    def load_model(self):
        """Load quantized model with LoRA."""
        from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
        from peft import get_peft_model, LoraConfig, TaskType, prepare_model_for_kbit_training

        # Load tokenizer
        self.tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        if self.tokenizer.pad_token is None:
            self.tokenizer.pad_token = self.tokenizer.eos_token

        # Quantization config: weights stored in 4-bit, matmuls run in compute_dtype
        bnb_config = BitsAndBytesConfig(
            load_in_4bit=self.qlora_config.load_in_4bit,
            bnb_4bit_compute_dtype=getattr(torch, self.qlora_config.bnb_4bit_compute_dtype),
            bnb_4bit_quant_type=self.qlora_config.bnb_4bit_quant_type,
            bnb_4bit_use_double_quant=self.qlora_config.bnb_4bit_use_double_quant
        )

        # Load quantized model
        self.model = AutoModelForCausalLM.from_pretrained(
            self.model_name,
            quantization_config=bnb_config,
            device_map="auto"
        )

        # Prepare for k-bit training (upcasts non-quantized parameters such as
        # layer norms to float32 and enables gradient checkpointing by default)
        self.model = prepare_model_for_kbit_training(self.model)

        # Apply LoRA adapters on top of the frozen 4-bit weights
        peft_config = LoraConfig(
            r=self.config.r,
            lora_alpha=self.config.lora_alpha,
            lora_dropout=self.config.lora_dropout,
            target_modules=self.config.target_modules,
            bias=self.config.bias,
            task_type=TaskType.CAUSAL_LM
        )
        self.model = get_peft_model(self.model, peft_config)
        self.model.print_trainable_parameters()
```

Full Fine-Tuning

```python
from dataclasses import dataclass
from typing import Any, Optional
import torch


@dataclass
class FullFinetuneConfig:
    """Full fine-tuning configuration."""
    learning_rate: float = 2e-5
    num_epochs: int = 3
    batch_size: int = 4
    gradient_accumulation_steps: int = 8
    warmup_ratio: float = 0.03
    weight_decay: float = 0.01
    max_grad_norm: float = 1.0
    fp16: bool = True
    gradient_checkpointing: bool = True
```
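`FullFinetuneConfig` enables gradient checkpointing and a large accumulation factor because full fine-tuning is memory-bound: every parameter carries gradients and optimizer state. A back-of-the-envelope sketch, using the accounting from the ZeRO paper (about 16 bytes per parameter for mixed-precision Adam, before activations) and roughly 0.5 bytes per parameter for a 4-bit QLoRA base. The model sizes are illustrative, and the QLoRA figure ignores quantization constants and the small adapter.

```python
# Mixed-precision Adam, per parameter: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights (4) + Adam first moment (4) + second moment (4)
BYTES_FULL_FT = 2 + 2 + 4 + 4 + 4  # = 16

# QLoRA: frozen base weights in 4-bit NF4 (half a byte each); adapter
# weights, their optimizer state, and quantization constants are ignored
BYTES_QLORA_BASE = 0.5


def memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory in decimal gigabytes, excluding activations."""
    return num_params * bytes_per_param / 1e9


for name, n in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"{name}: full fine-tuning ~{memory_gb(n, BYTES_FULL_FT):,.0f} GB, "
          f"QLoRA base weights ~{memory_gb(n, BYTES_QLORA_BASE):,.1f} GB")
```

Even a 7B model needs on the order of 112 GB for weights, gradients, and optimizer state under full fine-tuning, more than a single 80 GB GPU holds. That gap is what DeepSpeed ZeRO sharding and CPU offload address, while the same model's 4-bit base weights fit in about 3.5 GB.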

```python
class FullFinetuner:
    """Full model fine-tuning."""

    def __init__(
        self,
        model_name: str,
        config: FullFinetuneConfig,
        output_dir: str
    ):
        self.model_name = model_name
        self.config = config
        self.output_dir = output_dir
        self.model = None
        self.tokenizer = None

    def load_model(self):
        """Load model for full fine-tuning."""
        from transformers import AutoModelForCausalLM, AutoTokenizer

        self.tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        if self.tokenizer.pad_token is None:
            self.tokenizer.pad_token = self.tokenizer.eos_token

        # Keep float32 master weights: fp16 mixed precision (fp16=True) trains
        # float32 parameters under autocast, and loading the weights directly
        # in float16 makes the gradient scaler fail with
        # "Attempting to unscale FP16 gradients"
        self.model = AutoModelForCausalLM.from_pretrained(
            self.model_name,
            torch_dtype=torch.float32,
            device_map="auto"
        )

        if self.config.gradient_checkpointing:
            # Recompute activations during the backward pass to save memory
            self.model.gradient_checkpointing_enable()

    def train(self, train_dataset: Any, eval_dataset: Any = None):
        """Train with full fine-tuning."""
        from transformers import TrainingArguments, Trainer, DataCollatorForLanguageModeling

        training_args = TrainingArguments(
            output_dir=self.output_dir,
            num_train_epochs=self.config.num_epochs,
            per_device_train_batch_size=self.config.batch_size,
            per_device_eval_batch_size=self.config.batch_size,
            gradient_accumulation_steps=self.config.gradient_accumulation_steps,
            learning_rate=self.config.learning_rate,
            weight_decay=self.config.weight_decay,
            warmup_ratio=self.config.warmup_ratio,
            max_grad_norm=self.config.max_grad_norm,
            fp16=self.config.fp16,
            logging_steps=10,
            save_strategy="epoch",
            # Called `evaluation_strategy` in older transformers releases
            eval_strategy="epoch" if eval_dataset is not None else "no",
            gradient_checkpointing=self.config.gradient_checkpointing,
            report_to="tensorboard"
        )

        data_collator = DataCollatorForLanguageModeling(
            tokenizer=self.tokenizer,
            mlm=False
        )

        trainer = Trainer(
            model=self.model,
            args=training_args,
            train_dataset=train_dataset,
            eval_dataset=eval_dataset,
            data_collator=data_collator
        )
        trainer.train()

        self.model.save_pretrained(self.output_dir)
        self.tokenizer.save_pretrained(self.output_dir)
```
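With gradient accumulation, the batch the optimizer actually sees is larger than `per_device_train_batch_size`: gradients from several micro-batches (and from every GPU under data parallelism) are combined before each update. A small sketch of the arithmetic; the 10,000-example dataset is hypothetical, and the Trainer's exact step rounding can differ by one.

```python
import math


def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int = 1) -> int:
    """Examples whose gradients are combined into one optimizer step."""
    return per_device * grad_accum * num_gpus


def approx_steps_per_epoch(num_examples: int, batch: int) -> int:
    """Approximate optimizer steps per epoch."""
    return math.ceil(num_examples / batch)


# FullFinetuneConfig defaults: batch_size=4, gradient_accumulation_steps=8
for gpus in (1, 4):
    batch = effective_batch_size(4, 8, gpus)
    print(f"{gpus} GPU(s): effective batch {batch}, "
          f"~{approx_steps_per_epoch(10_000, batch)} optimizer steps per epoch")
```

Because `warmup_ratio` and the cosine schedule are defined over optimizer steps, adding GPUs or raising the accumulation factor shortens the schedule, so the learning rate is often worth revisiting when the effective batch size changes.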

```python
class DeepSpeedTrainer:
    """Train with DeepSpeed ZeRO-3 for models too large for one GPU."""

    def __init__(
        self,
        model_name: str,
        output_dir: str,
        deepspeed_config: Optional[dict] = None
    ):
        self.model_name = model_name
        self.output_dir = output_dir
        self.ds_config = deepspeed_config or self._default_config()

    def _default_config(self) -> dict:
        """Default DeepSpeed ZeRO-3 config with CPU offload."""
        # "auto" values are filled in from TrainingArguments by the
        # transformers DeepSpeed integration, so the two cannot disagree
        return {
            "bf16": {"enabled": True},
            "zero_optimization": {
                "stage": 3,
                "offload_optimizer": {"device": "cpu"},
                "offload_param": {"device": "cpu"},
                "overlap_comm": True,
                "contiguous_gradients": True,
                "reduce_bucket_size": "auto",
                "stage3_prefetch_bucket_size": "auto",
                "stage3_param_persistence_threshold": "auto",
                # Gather the sharded weights into one checkpoint on save
                "stage3_gather_16bit_weights_on_model_save": True
            },
            "gradient_accumulation_steps": "auto",
            "gradient_clipping": "auto",
            "train_batch_size": "auto",
            "train_micro_batch_size_per_gpu": "auto"
        }

    def train(self, train_dataset: Any, eval_dataset: Any = None):
        """Train with DeepSpeed (launch the script with `deepspeed` or `accelerate launch`)."""
        from transformers import AutoModelForCausalLM, AutoTokenizer
        from transformers import TrainingArguments, Trainer, DataCollatorForLanguageModeling

        # With ZeRO-3, create TrainingArguments before loading the model so
        # from_pretrained can partition the weights across GPUs while loading
        training_args = TrainingArguments(
            output_dir=self.output_dir,
            num_train_epochs=3,
            per_device_train_batch_size=1,
            gradient_accumulation_steps=8,
            learning_rate=2e-5,
            bf16=True,
            logging_steps=10,
            save_strategy="epoch",
            eval_strategy="epoch" if eval_dataset is not None else "no",
            deepspeed=self.ds_config,
            report_to="tensorboard"
        )

        tokenizer = AutoTokenizer.from_pretrained(self.model_name)
        if tokenizer.pad_token is None:
            tokenizer.pad_token = tokenizer.eos_token
        model = AutoModelForCausalLM.from_pretrained(self.model_name)

        trainer = Trainer(
            model=model,
            args=training_args,
            train_dataset=train_dataset,
            eval_dataset=eval_dataset,
            data_collator=DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)
        )
        trainer.train()
        trainer.save_model(self.output_dir)
        tokenizer.save_pretrained(self.output_dir)
```

Instruction Tuning

```python
from dataclasses import dataclass, field
from typing import Any, Optional
import torch

# TrainingExample is the dataclass defined in the data preparation section


@dataclass
class InstructionTuningConfig:
    """Configuration for instruction tuning."""
    model_name: str
    output_dir: str
    use_lora: bool = True
    lora_r: int = 16
    lora_alpha: int = 32
    max_length: int = 2048
    num_epochs: int = 3
    batch_size: int = 4
    learning_rate: float = 2e-4


class InstructionTuner:
    """Fine-tune for instruction following."""

    def __init__(self, config: InstructionTuningConfig):
        self.config = config
        self.model = None
        self.tokenizer = None

    def prepare_data(self, examples: list[TrainingExample]) -> Any:
        """Render examples as single training strings."""
        from datasets import Dataset

        formatted = []
        for ex in examples:
            # Simple role-tag template; inference prompts must use the same one
            text = f"<|system|>\n{ex.system}\n" if ex.system else ""
            text += f"<|user|>\n{ex.instruction}"
            if ex.input:
                text += f"\n{ex.input}"
            text += f"\n<|assistant|>\n{ex.output}"
            formatted.append({"text": text})
        return Dataset.from_list(formatted)

    def train(
        self,
        train_data: list[TrainingExample],
        eval_data: Optional[list[TrainingExample]] = None
    ):
        """Train instruction-following model."""
        from transformers import AutoModelForCausalLM, AutoTokenizer
        from trl import SFTTrainer, SFTConfig

        # Load model and tokenizer
        self.tokenizer = AutoTokenizer.from_pretrained(self.config.model_name)
        if self.tokenizer.pad_token is None:
            self.tokenizer.pad_token = self.tokenizer.eos_token
        self.model = AutoModelForCausalLM.from_pretrained(
            self.config.model_name,
            torch_dtype=torch.float16,
            device_map="auto"
        )

        # Prepare datasets
        train_dataset = self.prepare_data(train_data)
        eval_dataset = self.prepare_data(eval_data) if eval_data else None

        # LoRA config if enabled
        peft_config = None
        if self.config.use_lora:
            from peft import LoraConfig
            peft_config = LoraConfig(
                r=self.config.lora_r,
                lora_alpha=self.config.lora_alpha,
                target_modules=["q_proj", "v_proj", "k_proj", "o_proj"],
                lora_dropout=0.05,
                bias="none",
                task_type="CAUSAL_LM"
            )

        # Training config (SFTConfig extends TrainingArguments)
        sft_config = SFTConfig(
            output_dir=self.config.output_dir,
            num_train_epochs=self.config.num_epochs,
            per_device_train_batch_size=self.config.batch_size,
            learning_rate=self.config.learning_rate,
            # Renamed `max_length` in newer TRL releases
            max_seq_length=self.config.max_length,
            dataset_text_field="text",
            logging_steps=10,
            save_strategy="epoch",
            eval_strategy="epoch" if eval_dataset is not None else "no"
        )

        # Train
        trainer = SFTTrainer(
            model=self.model,
            args=sft_config,
            train_dataset=train_dataset,
            eval_dataset=eval_dataset,
            # Older TRL releases call this argument `tokenizer`
            processing_class=self.tokenizer,
            peft_config=peft_config
        )
        trainer.train()
        trainer.save_model()
```
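`prepare_data` bakes a specific role-tag template into every training string, so an inference prompt has to reproduce that template exactly, up to the point where the response begins, or the model starts from text unlike anything it was trained on. A minimal sketch (the helper names are hypothetical) that builds both strings from one function so they cannot drift apart:

```python
def render_prompt(instruction: str, input_text: str = "", system: str = "") -> str:
    """Everything up to where the model's answer starts, in prepare_data's template."""
    text = f"<|system|>\n{system}\n" if system else ""
    text += f"<|user|>\n{instruction}"
    if input_text:
        text += f"\n{input_text}"
    return text + "\n<|assistant|>\n"


def render_training_text(instruction: str, output: str,
                         input_text: str = "", system: str = "") -> str:
    """Training string: exactly the inference prompt followed by the target."""
    return render_prompt(instruction, input_text, system) + output


train_text = render_training_text("Classify the sentiment.", "positive", "I love it!")
prompt = render_prompt("Classify the sentiment.", "I love it!")

# At inference the model continues `prompt`; it must be a prefix of the
# training text, or the model starts from an unfamiliar context.
assert train_text.startswith(prompt)
print(repr(prompt))
```

For the chat-format path, `tokenizer.apply_chat_template(messages, add_generation_prompt=True)` plays the same role when the tokenizer ships a chat template.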

```python
class ChatTuner:
    """Fine-tune for chat/conversation."""

    def __init__(self, config: InstructionTuningConfig):
        self.config = config

    def prepare_conversations(self, conversations: list[list[dict]]) -> Any:
        """Prepare multi-turn conversation data."""
        from datasets import Dataset

        # Each conversation is a list of {"role": "system/user/assistant", "content": "..."}
        formatted = [{"messages": conv} for conv in conversations]
        return Dataset.from_list(formatted)

    def train(self, conversations: list[list[dict]]):
        """Train chat model."""
        import torch
        from transformers import AutoModelForCausalLM, AutoTokenizer
        from trl import SFTTrainer, SFTConfig

        tokenizer = AutoTokenizer.from_pretrained(self.config.model_name)
        if tokenizer.pad_token is None:
            tokenizer.pad_token = tokenizer.eos_token
        model = AutoModelForCausalLM.from_pretrained(
            self.config.model_name,
            torch_dtype=torch.float16,
            device_map="auto"
        )

        dataset = self.prepare_conversations(conversations)

        # Honor use_lora the same way InstructionTuner does
        peft_config = None
        if self.config.use_lora:
            from peft import LoraConfig
            peft_config = LoraConfig(
                r=self.config.lora_r,
                lora_alpha=self.config.lora_alpha,
                target_modules=["q_proj", "v_proj", "k_proj", "o_proj"],
                lora_dropout=0.05,
                bias="none",
                task_type="CAUSAL_LM"
            )

        sft_config = SFTConfig(
            output_dir=self.config.output_dir,
            num_train_epochs=self.config.num_epochs,
            per_device_train_batch_size=self.config.batch_size,
            learning_rate=self.config.learning_rate,
            # Renamed `max_length` in newer TRL releases
            max_seq_length=self.config.max_length
        )

        # A "messages" column is treated as conversational data: SFTTrainer
        # applies the tokenizer's chat template itself (the tokenizer must
        # define one)
        trainer = SFTTrainer(
            model=model,
            args=sft_config,
            train_dataset=dataset,
            # Older TRL releases call this argument `tokenizer`
            processing_class=tokenizer,
            peft_config=peft_config
        )
        trainer.train()
        trainer.save_model()
```

Evaluation and Metrics

```python
from dataclasses import dataclass, field
from typing import Any, Optional
from abc import ABC, abstractmethod
import math


@dataclass
class EvaluationResult:
    """Evaluation result."""
    metric: str
    score: float
    details: dict = field(default_factory=dict)


class Evaluator(ABC):
    """Abstract evaluator."""

    @abstractmethod
    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> EvaluationResult:
        """Evaluate predictions."""
        pass


class PerplexityEvaluator(Evaluator):
    """Evaluate the model's perplexity on reference texts."""

    def __init__(self, model: Any, tokenizer: Any):
        self.model = model
        self.tokenizer = tokenizer

    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> EvaluationResult:
        """Calculate perplexity on references (predictions are not used)."""
        import torch

        total_loss = 0.0
        total_tokens = 0
        self.model.eval()
        with torch.no_grad():
            for text in references:
                inputs = self.tokenizer(text, return_tensors="pt")
                inputs = {k: v.to(self.model.device) for k, v in inputs.items()}
                # The returned loss is the mean over the seq_len - 1 predicted
                # positions (each token predicts the next), so weight by that count
                num_predicted = inputs["input_ids"].size(1) - 1
                if num_predicted < 1:
                    continue
                outputs = self.model(**inputs, labels=inputs["input_ids"])
                total_loss += outputs.loss.item() * num_predicted
                total_tokens += num_predicted

        perplexity = math.exp(total_loss / total_tokens) if total_tokens else float("inf")
        return EvaluationResult(
            metric="perplexity",
            score=perplexity,
            details={"tokens": total_tokens}
        )
```
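Perplexity is the exponential of the average negative log-likelihood per predicted token, so each sequence has to be weighted by how many tokens it contributes, which is what `PerplexityEvaluator` accumulates. A worked example with made-up losses shows how far an unweighted average of per-sequence losses can drift:

```python
import math

# Hypothetical per-sequence results: (mean loss over predicted tokens, tokens predicted)
sequences = [(2.0, 10), (3.0, 90)]

# Token-weighted: total negative log-likelihood divided by total predicted tokens
total_nll = sum(loss * n for loss, n in sequences)
total_tokens = sum(n for _, n in sequences)
ppl_token_weighted = math.exp(total_nll / total_tokens)

# Naive alternative: average per-sequence losses, ignoring their lengths
ppl_naive = math.exp(sum(loss for loss, _ in sequences) / len(sequences))

print(f"token-weighted: {ppl_token_weighted:.2f}  naive: {ppl_naive:.2f}")
```

The long, harder sequence supplies 90 of the 100 tokens, so the token-weighted perplexity (about 18.17) sits well above the naive figure (about 12.18).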

```python
class RougeEvaluator(Evaluator):
    """Evaluate with ROUGE scores."""

    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> EvaluationResult:
        """Calculate mean ROUGE-1, ROUGE-2, and ROUGE-L F-measures."""
        from rouge_score import rouge_scorer

        scorer = rouge_scorer.RougeScorer(["rouge1", "rouge2", "rougeL"])
        scores = {"rouge1": [], "rouge2": [], "rougeL": []}
        for pred, ref in zip(predictions, references):
            # score(target, prediction): the reference comes first
            result = scorer.score(ref, pred)
            for key in scores:
                scores[key].append(result[key].fmeasure)

        avg_scores = {k: (sum(v) / len(v) if v else 0.0) for k, v in scores.items()}
        return EvaluationResult(
            metric="rouge",
            score=avg_scores["rougeL"],
            details=avg_scores
        )
```
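The `fmeasure` that `RougeEvaluator` averages is the harmonic mean of precision and recall over overlapping n-grams. A stdlib-only sketch of ROUGE-1 for intuition; it tokenizes by lowercase whitespace splitting, whereas `rouge_score` uses its own tokenizer (and optional stemming), so numbers can differ slightly on real text:

```python
from collections import Counter


def rouge1_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 on lowercase whitespace tokens (no stemming)."""
    pred_counts = Counter(prediction.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection clips each word to the smaller count
    overlap = sum((pred_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


print(rouge1_f1("the cat sat on the mat", "the cat is on the mat"))
```

Five of the six unigrams match in both directions, so precision, recall, and F1 are all 5/6 here; ROUGE-2 and ROUGE-L apply the same F-measure to bigrams and to the longest common subsequence.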

```python
class ExactMatchEvaluator(Evaluator):
    """Evaluate exact match accuracy."""

    def __init__(self, normalize: bool = True):
        self.normalize = normalize

    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> EvaluationResult:
        """Calculate exact match accuracy."""
        if len(predictions) != len(references):
            raise ValueError("predictions and references must have the same length")
        correct = 0
        for pred, ref in zip(predictions, references):
            if self.normalize:
                # Ignore case and surrounding whitespace
                pred = pred.strip().lower()
                ref = ref.strip().lower()
            if pred == ref:
                correct += 1

        accuracy = correct / len(predictions) if predictions else 0.0
        return EvaluationResult(
            metric="exact_match",
            score=accuracy,
            details={"correct": correct, "total": len(predictions)}
        )
```
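`ExactMatchEvaluator` normalizes only case and surrounding whitespace, so "Paris." and "paris" still count as a miss. The SQuAD evaluation script uses a stricter normalization (lowercase, strip punctuation, drop the English articles a/an/the, collapse whitespace); a sketch modeled on it, with illustrative function names:

```python
import re
import string

_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, remove punctuation and articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(predictions: list[str], references: list[str]) -> float:
    """Fraction of pairs that are equal after normalization."""
    if not predictions:
        return 0.0
    hits = sum(normalize_answer(p) == normalize_answer(r)
               for p, r in zip(predictions, references))
    return hits / len(predictions)


preds = ["Paris.", "The Eiffel Tower", "42"]
refs = ["paris", "eiffel tower", "forty-two"]
print(exact_match(preds, refs))
```

The third pair still fails: normalization removes formatting noise, not differences in wording, which is where the ROUGE and LLM-judge evaluators come in.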

```python
import re


class LLMJudgeEvaluator(Evaluator):
    """Use an LLM as judge for evaluation."""

    def __init__(self, llm_client: Any):
        # Assumed client interface: `await llm.complete(prompt)` returns an
        # object whose `.content` holds the reply text
        self.llm = llm_client

    async def evaluate_async(
        self,
        predictions: list[str],
        references: list[str],
        instructions: Optional[list[str]] = None
    ) -> EvaluationResult:
        """Evaluate using an LLM as judge."""
        scores = []
        unparsed = 0
        for i, (pred, ref) in enumerate(zip(predictions, references)):
            instruction = instructions[i] if instructions else "Answer the question."
            prompt = f"""Rate the quality of the response on a scale of 1-5.

Instruction: {instruction}
Expected response: {ref}
Actual response: {pred}

Consider: accuracy, completeness, and relevance.
Score (just the number 1-5):"""
            response = await self.llm.complete(prompt)
            # Take the first standalone digit 1-5 ("4", "Score: 4/5", ...);
            # unparseable replies are counted instead of silently scored as 3
            match = re.search(r"\b([1-5])\b", response.content)
            if match:
                scores.append(int(match.group(1)))
            else:
                unparsed += 1

        avg_score = sum(scores) / len(scores) if scores else 0.0
        return EvaluationResult(
            metric="llm_judge",
            # Map the 1-5 scale onto 0-1 (1 -> 0.0, 5 -> 1.0)
            score=(avg_score - 1) / 4 if scores else 0.0,
            details={"raw_scores": scores, "unparsed": unparsed}
        )

    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> EvaluationResult:
        """Sync wrapper.

        asyncio.run() fails inside an already running event loop (Jupyter,
        FastAPI handlers); await evaluate_async() directly there.
        """
        import asyncio
        return asyncio.run(self.evaluate_async(predictions, references))


class EvaluationSuite:
    """Run multiple evaluations."""

    def __init__(self, evaluators: list[Evaluator]):
        self.evaluators = evaluators

    def evaluate(
        self,
        predictions: list[str],
        references: list[str]
    ) -> dict[str, EvaluationResult]:
        """Run all evaluations."""
        results = {}
        for evaluator in self.evaluators:
            result = evaluator.evaluate(predictions, references)
            results[result.metric] = result
        return results

    def summary(self, results: dict[str, EvaluationResult]) -> str:
        """Generate summary report."""
        lines = ["Evaluation Results:", "=" * 40]
        for metric, result in results.items():
            lines.append(f"{metric}: {result.score:.4f}")
            if result.details:
                for k, v in result.details.items():
                    lines.append(f"  {k}: {v}")
        return "\n".join(lines)
```

Production Fine-Tuning Service

```python
from fastapi import FastAPI, HTTPException, BackgroundTasks
from pydantic import BaseModel
from typing import Optional, Any
import asyncio
import uuid

app = FastAPI()


class TrainingJob:
    """Training job state."""

    def __init__(self, job_id: str, config: dict):
        self.id = job_id
        self.config = config
        self.status = "pending"  # pending -> running -> completed | failed | cancelled
        self.progress = 0.0
        self.metrics = {}
        self.error = None


# In-memory job storage: lost on restart and not shared across worker processes
jobs: dict[str, TrainingJob] = {}


class CreateJobRequest(BaseModel):
    model_name: str
    training_data_path: str
    output_dir: str
    use_lora: bool = True
    lora_r: int = 8
    num_epochs: int = 3
    batch_size: int = 4
    learning_rate: float = 2e-4


class JobResponse(BaseModel):
    job_id: str
    status: str
    progress: float
    metrics: dict
    error: Optional[str] = None


async def run_training(job: TrainingJob):
    """Run a (simulated) training job.

    Real training is blocking GPU work and belongs in a separate worker
    process, not on the API server's event loop.
    """
    if job.status == "cancelled":
        return  # Cancelled before the job started
    try:
        job.status = "running"
        num_epochs = job.config["num_epochs"]
        for epoch in range(num_epochs):
            await asyncio.sleep(1)  # Stand-in for one epoch of actual training
            if job.status == "cancelled":
                return  # Honor cancel requests at epoch boundaries
            job.progress = (epoch + 1) / num_epochs
            job.metrics["epoch"] = epoch + 1
            job.metrics["loss"] = 1.0 / (epoch + 1)  # Simulated
        job.status = "completed"
        job.progress = 1.0
    except Exception as e:
        job.status = "failed"
        job.error = str(e)
```
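A job's `status` moves through a small lifecycle (pending, running, then completed, failed, or cancelled), and bugs such as a cancelled job later being marked completed come from writing statuses without checking where the job currently is. A minimal sketch, using a hypothetical `TRANSITIONS` table and `transition` helper, of making the allowed moves explicit so handlers can reject invalid ones:

```python
# Allowed status transitions for a training job (terminal states have none)
TRANSITIONS = {
    "pending": {"running", "cancelled"},
    "running": {"completed", "failed", "cancelled"},
    "completed": set(),
    "failed": set(),
    "cancelled": set(),
}


class InvalidTransition(Exception):
    pass


def transition(current: str, new: str) -> str:
    """Return the new status, or raise if the move is not allowed."""
    if new not in TRANSITIONS.get(current, set()):
        raise InvalidTransition(f"cannot move job from {current!r} to {new!r}")
    return new


status = "pending"
status = transition(status, "running")
status = transition(status, "completed")
try:
    transition(status, "cancelled")  # a finished job cannot be cancelled
except InvalidTransition as err:
    print(err)
```

In an HTTP handler, an `InvalidTransition` maps naturally onto a 409 Conflict response.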

```python
@app.post("/v1/jobs")
async def create_job(
    request: CreateJobRequest,
    background_tasks: BackgroundTasks
) -> JobResponse:
    """Create training job."""
    job_id = str(uuid.uuid4())
    job = TrainingJob(
        job_id=job_id,
        config=request.model_dump()
    )
    jobs[job_id] = job

    # Runs after the response has been sent
    background_tasks.add_task(run_training, job)

    return JobResponse(
        job_id=job_id,
        status=job.status,
        progress=job.progress,
        metrics=job.metrics
    )


@app.get("/v1/jobs/{job_id}")
async def get_job(job_id: str) -> JobResponse:
    """Get job status."""
    if job_id not in jobs:
        raise HTTPException(status_code=404, detail="Job not found")
    job = jobs[job_id]
    return JobResponse(
        job_id=job.id,
        status=job.status,
        progress=job.progress,
        metrics=job.metrics,
        error=job.error
    )


@app.delete("/v1/jobs/{job_id}")
async def cancel_job(job_id: str) -> dict:
    """Mark a job cancelled; the training loop must check job.status to stop."""
    if job_id not in jobs:
        raise HTTPException(status_code=404, detail="Job not found")
    job = jobs[job_id]
    if job.status in ("completed", "failed", "cancelled"):
        raise HTTPException(status_code=409, detail=f"Job already {job.status}")
    job.status = "cancelled"
    return {"status": "cancelled"}


@app.get("/v1/jobs")
async def list_jobs() -> list[JobResponse]:
    """List all jobs."""
    return [
        JobResponse(
            job_id=job.id,
            status=job.status,
            progress=job.progress,
            metrics=job.metrics,
            error=job.error
        )
        for job in jobs.values()
    ]


@app.get("/health")
async def health():
    return {"status": "healthy"}
```

References

- LoRA Paper: https://arxiv.org/abs/2106.09685

- QLoRA Paper: https://arxiv.org/abs/2305.14314

- PEFT Library: https://github.com/huggingface/peft

- TRL Library: https://github.com/huggingface/trl

Conclusion

Fine-tuning is the bridge between general-purpose LLMs and specialized applications. Start with data quality: a small dataset of high-quality examples often outperforms a large dataset of mediocre ones. Use LoRA or QLoRA for most use cases; they often reach results comparable to full fine-tuning at a fraction of the compute and memory cost, and the adapter weights are easy to swap and version.

Choose your target modules carefully. Targeting the attention projections (q, k, v, o) is a common, efficient starting point, although the QLoRA paper found that applying adapters to all linear layers, including the MLP projections, was needed to match full fine-tuning. Set the rank (r) based on task complexity: r=8 often suffices for simple adaptations, while complex domain shifts may need r=32 or higher. Always hold out evaluation data and monitor for overfitting, since fine-tuned models can memorize training data quickly.

For instruction tuning, format consistency matters: use the same prompt template during training and inference. Evaluate beyond loss metrics; task-specific evaluation and human judgment reveal issues that perplexity cannot. In production, version your adapters alongside your training data and evaluation results so you can reproduce and compare experiments. Above all, fine-tuning is iterative: start simple, evaluate thoroughly, and refine based on real-world performance rather than training metrics alone.
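Monitoring for overfitting usually comes down to stopping once validation loss stops improving and keeping the best checkpoint. A minimal sketch of that rule with a patience counter; the loss values are made up. With the Hugging Face Trainer used throughout this guide, `transformers.EarlyStoppingCallback` implements the same idea (it expects `load_best_model_at_end=True` and a periodic evaluation strategy).

```python
def early_stopping_epoch(val_losses: list[float], patience: int = 2,
                         min_delta: float = 0.0) -> tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 0-indexed.

    Training stops once validation loss has failed to improve by more than
    min_delta for `patience` consecutive evaluations; keep best_epoch's checkpoint.
    """
    best_loss = float("inf")
    best_epoch = 0
    bad_evals = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, bad_evals = loss, epoch, 0
        else:
            bad_evals += 1
            if bad_evals >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


# Hypothetical eval losses: improving, then rising as the model starts to memorize
print(early_stopping_epoch([1.20, 0.95, 0.90, 0.93, 0.97, 1.05]))
```

Here the loss bottoms out at epoch 2 and training halts at epoch 4, two evaluations later, rather than running to the end and keeping an overfit final checkpoint.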

Discover more from C4: Container, Code, Cloud & Context
